OCAD University

06/12/2023

06/02/2023

To OCAD U Donors,

I’m graduating from the undergraduate Digital Futures program at OCAD now. During my first year of school, I was studying computer science at Western University. I decided to transfer to OCAD in my second year because I felt that OCAD would give me more freedom to explore my interests in technology and art. I found the school unique in that I had many hands-on learning experiences but was also pushed to think about the theoretical and cultural implications of my work. I am grateful to be graduating with a body of projects that reflects the activities I would like to pursue in the future.

My work explores visual communication, creative tools, and data in different forms. I follow where my curiosity takes me, and the things I make are a reflection of the ever-changing questions floating in my mind. Through my projects, I aim to try something new (at least, new to me), combine my interests across a range of fields, share a part of myself, and make people think. I am inspired by unconventional systems that set off change, the complexity of the natural world, and the evolution of both language and technology throughout history.

For my thesis project, I grew salt crystals on physical letters, turned them into a font, and then fed the font into a machine learning model that outputs synthesized letters, resulting in a family of fonts. Because such models are usually trained on existing digital fonts, I chose a source of data from the physical world. During GradEx, visitors interacted with the font through a speech-to-text website I built with OpenAI’s Whisper API. Through the combination of natural growth and man-made technology, my work explores the role of design tools in creative outcomes, challenges the way we communicate through digital fonts, and questions what it means to make and break the rules of generativity in the process of human-led creation.

Outside of the classroom, I have interned at Addepar, Cisco, and RBC, where I designed enterprise software, conducted research studies, and built tooling for design systems in the financial and cybersecurity sectors. I have also spent many weekends at hackathons, as a participant during my first year, and a mentor in my fourth year. Collaborating with people that have a range of skillsets and backgrounds has taught me a lot about teamwork, diversity, and the value of different perspectives. During my time as a student, I have come to value peers that care about the quality of their work and motivate me to have higher standards. I like working with people who enjoy trying new ideas and asking meaningful questions.

After graduation, I am looking forward to moving to New York in July to work full time as a product designer at Addepar, working on financial applications. I also have many ideas for side projects related to art and technology that I plan to continue. Thank you for the recognition and the donation towards my awards, which will go towards supporting my independent art practice. I feel encouraged as I graduate and start an exciting path in my career.

05/06/2023

At the end of the year, OCAD has this exhibition of graduating students' work, called GradEx. My final project, Crystal type, is about making fonts by growing crystals and inputting the letters into a machine learning model. For GradEx, I set up a space where people could interact with the font.

04/25/2023

My project explores the role of design tools in creative outcomes through applications of data science in font design, and challenges the way we communicate through digital typefaces.

It begins with an organic phenomenon and develops through a series of experiments with man-made tools. Inspired by the formation of salt crystals, I grew my own on physical letters. These letters were then brought into the digital realm, where I played with creating my own font, using a machine learning model to produce characters given reference input glyphs, and displaying the font on an interactive website using OpenAI's speech-to-text Whisper API.

04/05/2023

Thesis notes from September 21, 2022 to April 5, 2023.

12/10/2022

This project combines typography and prompt-based image generation through building a tool for "decorating" text, powered by Stable Diffusion’s Image-to-Image pipeline, using Hugging Face Spaces, Python, and Gradio.

The tool takes a piece of text, a font size, and a prompt. An image is created from the text and font size and sent to the model alongside the prompt, producing an output that mixes the initial letterforms with the imagery described by the prompt. With my text decorator, I made a series of uppercase characters from the Latin alphabet, using prompts based on each individual letter.

12/08/2022

Generative typefaces are a recent development in the history of typography, made possible by the advent of digital technology, that harness the power of computers as collaborators. At its core, generative type is about using rule-based systems to form glyphs, which typically involves the use of algorithms and software to carry out a number of steps.

Although this description could apply to any digital font, what differentiates generative typefaces from the everyday digital font is that they use computational methods in a creative manner, often involving data that is dynamic instead of static. This delegates substantial aspects of glyph design to algorithms predefined by the designer and, in many cases, relies on external data coming from neither the designer nor the computer. Generative faces can also fall under the larger classification of experimental typefaces.

11/07/2022

Like many Chinese characters, ‘加’ has its origins in agriculture. As technology developed and popular Chinese culture shifted away from a farming-centric society, language evolved along with the changes across communities. The need for literal representation in written communication faded, and abstraction replaced it. The original meaning of ‘加’ was ‘to add strength’; today, it has evolved to mean ‘to add’ in the general sense of increasing.

07/02/2022

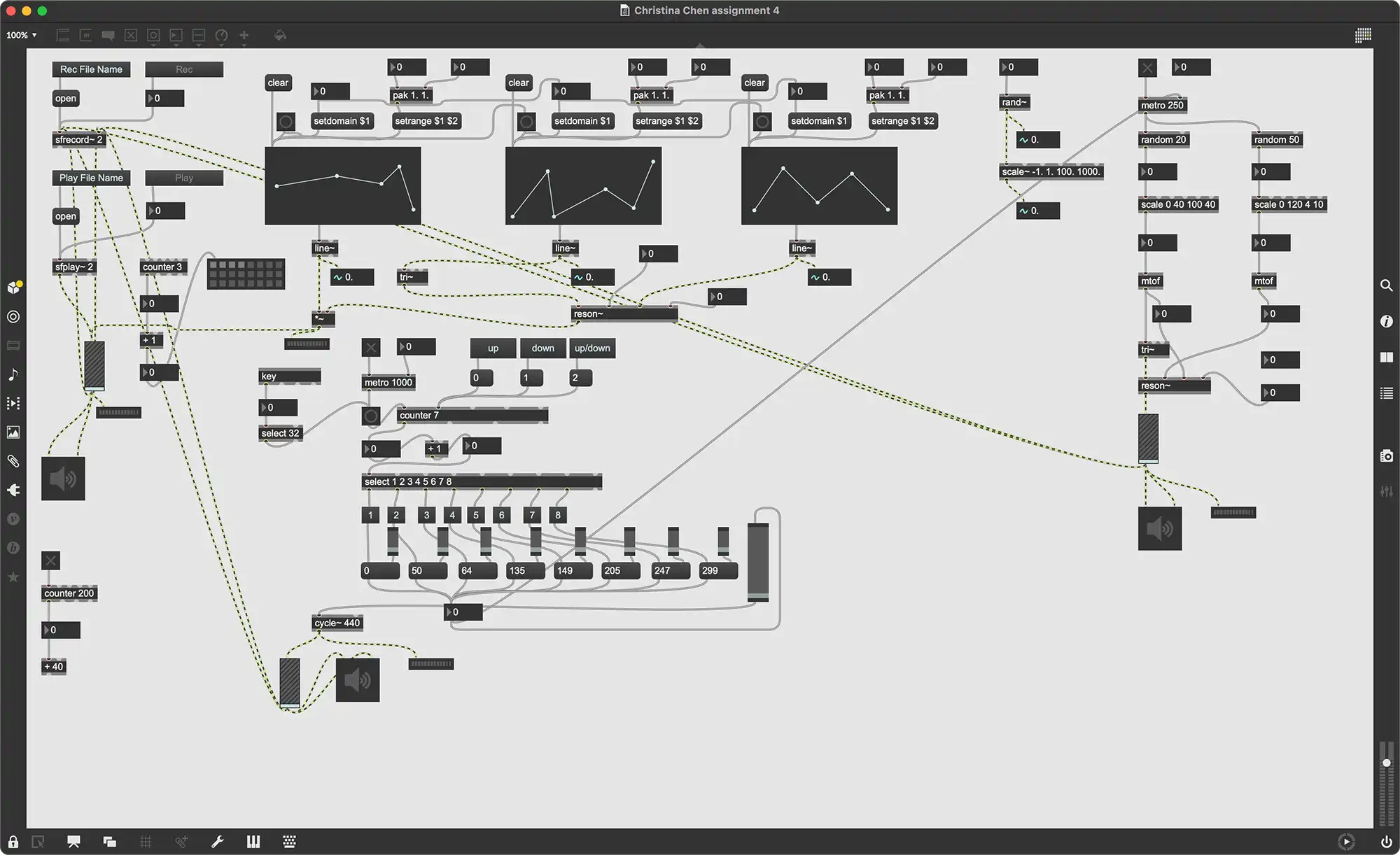

Electroacoustics.

05/16/2022

05/03/2022

My Atelier project is a decentralized group saving app based on the concept of ROSCAs, which are rotating savings and credit associations. It's a way of saving money that is more common in developing countries, where people have limited access to formal financial institutions. Usually there is a fixed group of participants who regularly contribute sums of money to a pot, and then the total balance in the pot is allocated to one group member based on some type of schedule, lottery, auction or agreed upon rules. This is really useful for people who don't have enough money to make a big purchase or business investment or something like that. And this way every member of the community will be able to have that chance.

But with ROSCAs, the community usually needs to rely on a central figure to distribute the money. There can be a lack of trust, like what if this person just runs off with the money or takes a bit for themselves? With smart contracts and blockchain technology, there is a way to make this system decentralized, so instead of relying on a person or group to execute this transaction, we can write some code that executes it, so that everyone can see what's happening.
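
The rotation described above can be sketched in a few lines of plain Python. This is only an illustration of the fixed-schedule case, not the app's smart contract: the `Rosca` class, the member names, and the contribution amount are all hypothetical, and the in-memory `ledger` list merely stands in for the publicly visible record a blockchain would provide.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Rosca:
    """Toy model of a rotating savings group with a fixed payout order."""
    members: List[str]
    contribution: int  # amount each member pays in per round (hypothetical units)
    balances: Dict[str, int] = field(default_factory=dict)
    ledger: List[Tuple[int, str, int]] = field(default_factory=list)

    def run_round(self, round_index: int) -> str:
        """Collect one contribution from every member and pay out the pot."""
        pot = 0
        for member in self.members:
            self.balances[member] = self.balances.get(member, 0) - self.contribution
            pot += self.contribution
        # Fixed rotation: the member whose turn it is receives the whole pot.
        recipient = self.members[round_index % len(self.members)]
        self.balances[recipient] += pot
        # Each payout is appended to a ledger that any member can inspect.
        self.ledger.append((round_index, recipient, pot))
        return recipient

    def run_cycle(self) -> None:
        """Run one round per member so that everyone receives the pot once."""
        for round_index in range(len(self.members)):
            self.run_round(round_index)


group = Rosca(members=["Ana", "Ben", "Chi", "Dev"], contribution=100)
group.run_cycle()
for round_index, recipient, pot in group.ledger:
    print(f"round {round_index}: {recipient} receives {pot}")
```

After a full cycle every member has paid in and received the same total, so the sketch only shifts when each person gets access to a lump sum, which is the point of the scheme.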

04/20/2022

Dimple Sans is a sans-serif text typeface designed by Christina Chen in 2022. It takes inspiration from the distinct stroke widths of traditional serif typefaces, the utility of condensed type, and the slight whimsicality of ink traps.

04/16/2022

I collected a dataset containing 699 black and white logos and used StyleGAN2 to train a model based on this dataset. I ran two cases and ended up with different results.

12/23/2021

I first got the idea for my project after reading an article about a park in Japan that was asking visitors to limit screaming on rollercoasters to prevent the spread of COVID-19. They even made a promotional video where the president of the park was filmed wearing a mask and riding a rollercoaster, to prove that it was possible to go on a ride without screaming. The final frame of the video reads 絶叫は心の中で, which translates to "Please scream inside your heart."

I also just have a special place in my heart for amusement parks, because I worked at Canada's Wonderland for a long time. Coasters is a diner I spent two years at, before moving to a buffet inside the park. I was interested in creating a visualization about theme parks and looking into how the pandemic affected operations. I found data about park performance on Wikipedia and the Themed Entertainment Association's 2020 annual report on Global Attractions.

12/16/2021

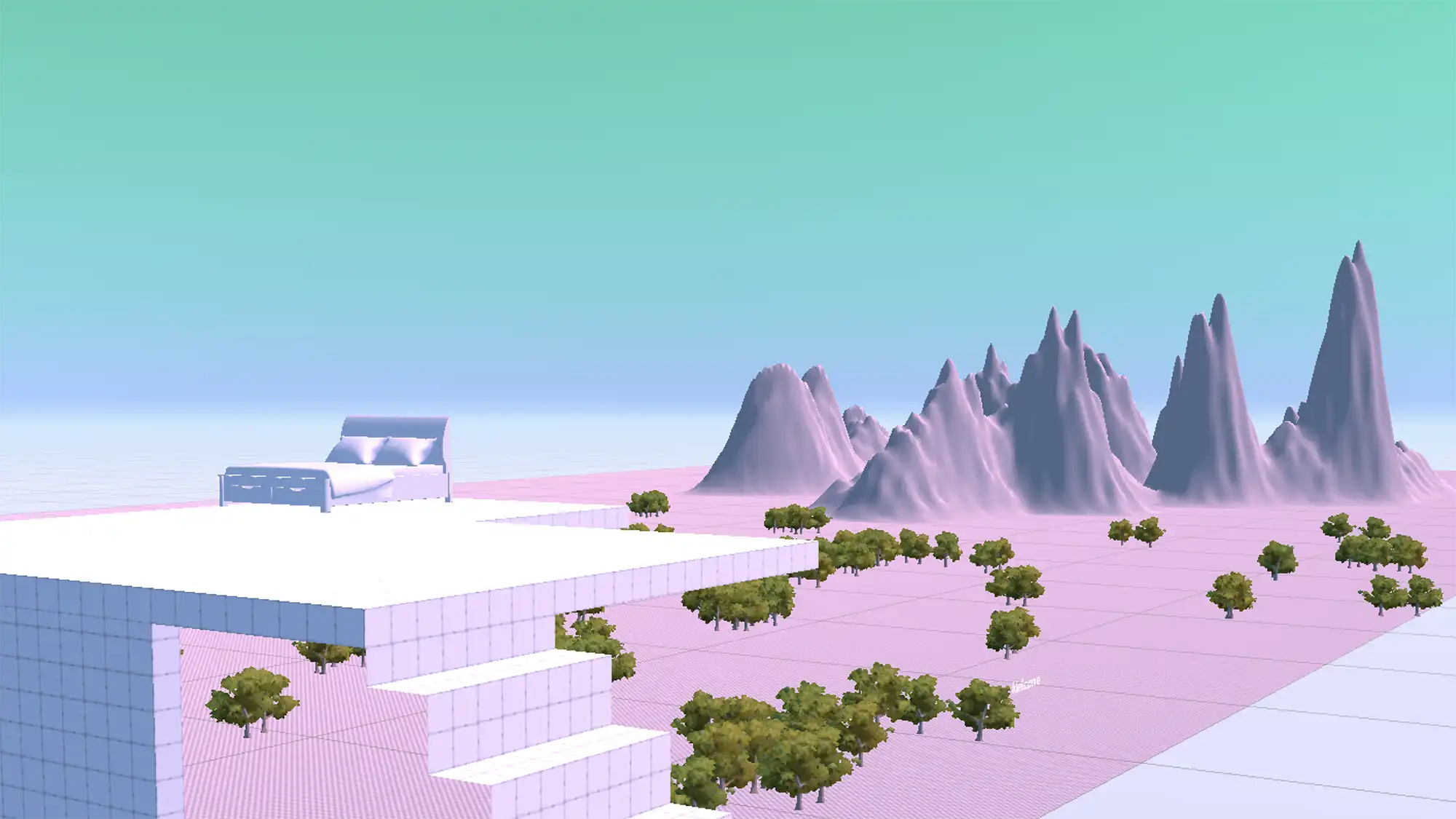

The average person spends about a third of their entire life sleeping. I have always found the topic of sleep very interesting, because it takes up so much of our life, and has such a big impact on the time we spend being awake. For several months now, I have been tracking my time across various activities such as sleep, work, school, and more, so that I can make better decisions around how I spend my time. I use Toggl Track for this.

11/02/2021

I grew up on the Internet. It’s like a second home to me. My screen time is embarrassingly high, and these days I rely on apps to interact with my friends and family. So, when I saw the Statistics Canada dataset titled “Selected online activities by gender, age group and highest certificate, diploma or degree completed,” I was immediately drawn to it.

Something else that stood out to me was that the data was fairly recent. Results came from the 2020 Canadian Internet Use Survey, which had a data collection period from November 2020 to March 2021 with varying reference periods throughout the survey, including "current or regular use", "past month", "past three months" or "past 12 months" preceding the interview date. Since COVID-19 hit in March 2020, we’ve been staying inside for much longer than we’re used to, and participation in online activities has grown tremendously. I was pretty excited because this data would reflect the state of Internet use during the pandemic.

My primary audience is Canadians aged 15 to 24. Because this group is online more often than the older age groups, I thought they might be more interested. But I still wanted to show information that all Internet users, regardless of age, would care to stop and look at for a second.

04/08/2021

Introducing Dream Wear, the clothes of your dream. With a simple dream or thought, you can instantly project your favorite meme or design. And it’s easy to do – just wear the discreet Dream Wear neckband, and the band will instantly detect your thoughts pertaining to the Dream Wear device, and will move and change its projections accordingly. Dream Wear uses the latest in brain scanning technology and is able to absorb and scan 100 thoughts per minute with 99% accuracy using new state of the art brain wave information scanning technology. Dream Wear – wear your dreams.

03/12/2021

02/10/2021

12/15/2020

Building a UX design chatbot with AWS Amplify and Vue.js.

11/26/2020

11/12/2020

Amplifying human ability

As we’ve seen in the past few decades, computers continue to grow in capacity and power. We are getting closer to producing machines that are capable of individual thought, on par with that of humans. In some areas, we’ve already created machines that surpass human abilities. One of the most famous examples is the pair of Deep Blue vs. Garry Kasparov chess matches in 1996 and 1997. Deep Blue’s win in the 1997 rematch marked the first time a computer defeated a reigning world champion in a match under standard tournament conditions.

I often hear people say things like, “When will AI take over the world?” and “Will AI replace my job?” The way I see it is, humans are in control. It’s our responsibility to govern and apply AI in producing solutions that benefit people. AI is a tool we can leverage, not an external, independent force. In a 1995 interview, Steve Jobs said, “Humans are tool builders, and we build tools that can dramatically amplify our innate human abilities...I believe that the computer will rank near, if not at the top, as history unfolds.” To me, AI is an incredibly influential tool that is driving the speed of innovation in our world.

The applications of AI in art and design are endless, really. Although not extensive, I’ve curated a list of what I think are some of the most promising and relevant use cases.

Web design and layouts

The team behind Wix Artificial Design Intelligence (ADI) trained models using websites that were visually attractive, with high traffic and conversion rates. These models give users building websites on Wix suggestions to improve their designs. There are many other examples of companies using AI to generate layouts for a variety of mediums, such as slide decks, blogs, and app interfaces.

Creativity

In the words of Brian Morris, a senior designer at Microsoft AI, “What happens when you randomly throw two datasets together and allow ML to find patterns?” Or, what happens when you input several images, videos, and text, and allow AI to compose a story or film? Can AI come up with ideas? A simple application, Artbreeder, allows users to generate endless new images by mixing existing images together.

Personalization

Personalization is perhaps one of the most commonly encountered applications of AI: our data is often used to train models, which in turn provide us with more accurate recommendations. For example, platforms like Netflix and Spotify collect data surrounding the content we consume, in order to suggest media we’re more likely to enjoy. One benefit of personalization is improved UX, making it easier for users to find what they’re looking for. Greater user satisfaction is typically aligned with business goals.

UI design tools

AI is helping designers work more efficiently, and blurring the lines between design and development. Tools like Uizard utilize deep semi-supervised learning algorithms to generate functional prototypes from hand-drawn wireframes, thus significantly cutting down on the time traditionally needed to create and test app ideas.

Image editing

For years, AI has been essential to digital creators. The industry-leading application, Adobe Photoshop, has been using AI to power tools like content-aware fill, spot-healing, and subject selection. Most recently, Adobe has been training generative adversarial networks (GANs) for the release of neural filters, a feature that focuses on facial retouching through skin smoothing, style transfer, smart portrait, and more.

Content generation

AI has become an incredibly useful tool for us when it comes to generating content, whether it be copy, imagery, or other forms of media. One example is Alibaba’s AI copywriter, which uses deep learning, natural language processing, and millions of product descriptions to generate smart copy for merchants to use on their platforms.

Personally, as a digital product designer who’s always looking to optimize my workflow, I’m most excited about advances in the area of UI design. I believe that solving for common design patterns helps designers focus their energy on more unique challenges. The rise of models like GPT-3 empowers us to turn ideas into functioning, testable products by simply typing a sentence. Advances in tooling bridge the gap between design and engineering, resulting in smoother collaboration and faster production. I can’t wait to see how we use AI to make the world a better place.

11/02/2020

A hot dog is a sandwich, but it’s not a sandwich. The reason hot dogs don’t feel like sandwiches is that humans have been trained to recognize and identify more traditional forms of sandwiches as such. Thus, hot dogs do not fit in the semantic category of sandwiches. However, because hot dog wieners are sandwiched between two ends of a bun, they are still sandwiches in a lexical sense. Hot dogs are sandwiches in the way that tacos, ice cream sandwiches, Oreo cookies, and three humans standing closely in a packed train are sandwiches. Hot dogs are not sandwiches in the way that grilled cheeses, hamburgers, tortas, and banh mi are sandwiches.

10/22/2020

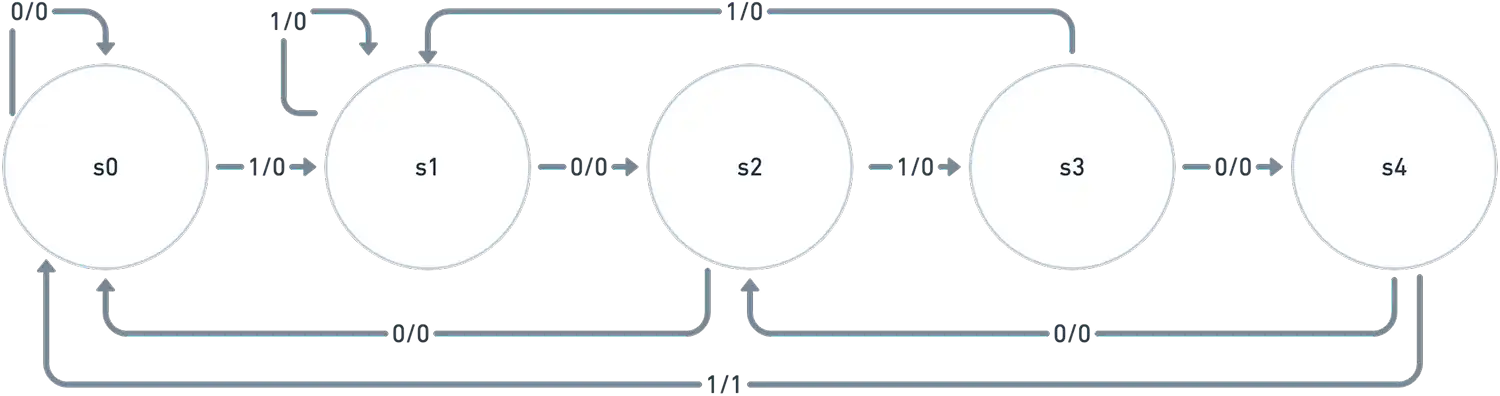

State machine I made in Whimsical.

10/17/2020

Continuing from my previous code, I played around with changing the number of lines, colours, and points. Instead of circles for points, I drew stars and numbers, with the numbers taken from an array holding the current minute and second on the user’s computer. I moved the for loop to the draw function and slowed the frame rate so that you can see each line appearing.

10/11/2020

10/07/2020

I chose to recreate Sol LeWitt’s Wall Drawing #118 in a digital format.

On a wall surface, any continuous stretch of wall, using a hard pencil, place fifty points at random. The points should be evenly distributed over the area of the wall. All of the points should be connected by straight lines.
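
As a rough illustration of these instructions (not the original sketch), the geometry can be computed in plain Python. The function name, the canvas size, and the seed below are hypothetical; uniform random sampling is only an approximation of "evenly distributed", and "all of the points should be connected" is read here as one straight line between every pair of points.

```python
import itertools
import random


def wall_drawing_118(width, height, n_points=50, seed=None):
    """Return random points on a width x height wall and a line for every pair."""
    rng = random.Random(seed)
    # Place the points uniformly at random over the wall area.
    points = [(rng.uniform(0, width), rng.uniform(0, height)) for _ in range(n_points)]
    # Connect every pair of points with a straight line segment.
    lines = list(itertools.combinations(points, 2))
    return points, lines


points, lines = wall_drawing_118(width=800, height=600, seed=118)
print(len(points), "points,", len(lines), "lines")
```

With fifty points this reading produces 50 × 49 / 2 = 1225 line segments, which is why the drawing becomes so dense even though the rule itself is three short sentences.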

10/05/2020

In the first drawing, I chose 0354's rule because it was fun to read.

- Draw any shape you want, but make sure it touches each edge of the paper.

- Draw a different shape inside of the first shape, and make sure it touches the edges of the first shape without overlapping.

- Repeat drawing shapes within the edges of the other shapes until you cannot fit any other shapes on the paper. You can draw shapes in the gaps between other shapes.

The second drawing was inspired by William Latham's FormSynth hand drawings and evolutionary systems. I thought it would be interesting to use abstract elements of an eye, because eyes are commonly used as an example of irreducible complexity, so there are theories out there about ways the eye could have been formed through evolution.

09/30/2020

My rule

- Start in the top left.

- Draw a line that is not straight.

- Beside that, copy the line and add another line to it. The addition of these lines together is what I refer to as a group.

- For every new group you make, copy it but add another line.

09/16/2020

There are two worlds that different players can enter. In each world, there are many swimming fish and a black hole. In one world, the fish are alive. In the other world, the fish are dead. You can drag a fish into a black hole and it will appear in the other world in a different state of living. Our inspiration was Handlining and Squid Jigging by the Food and Agriculture Organization of the United Nations.

09/09/2020

What apps are most important to you? How do you think they will change in the next 10 years?

The apps that are most important to me are tools that help me create, like Figma, GitHub, and Google Docs, along with apps for communicating and connecting with other people. In 2030, I think AI models like GPT-3 will be able to automate mundane tasks in creation. As companies continue to collect our data, social media platforms will continue to get more personalized. I also think that in ten years, the use of voice technology for these types of applications will be more common.